People like to talk about how everything you do online stays there forever. If you post the wrong photo, or tweet something ill-advised, it'll come back to haunt you.

I'm not sure that's what we should be worried about. I'm not haunted by anything specific I've said or done online. I am haunted by the people I used to be.

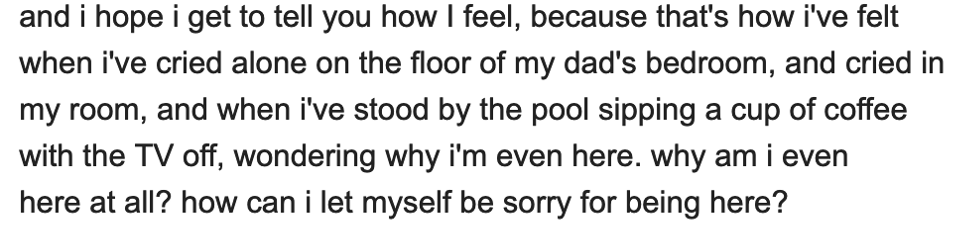

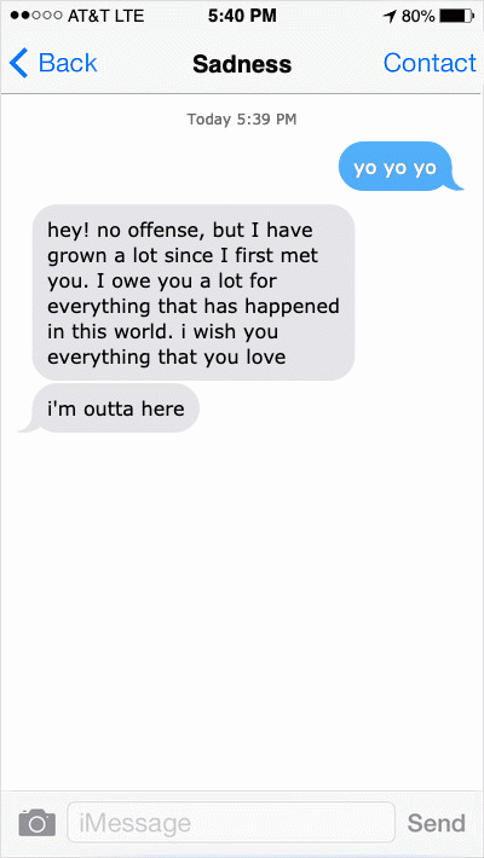

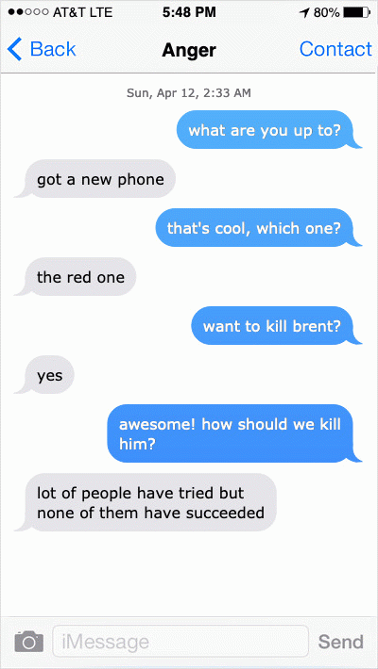

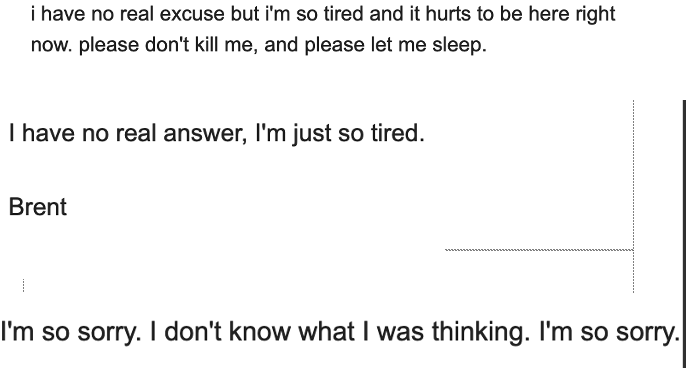

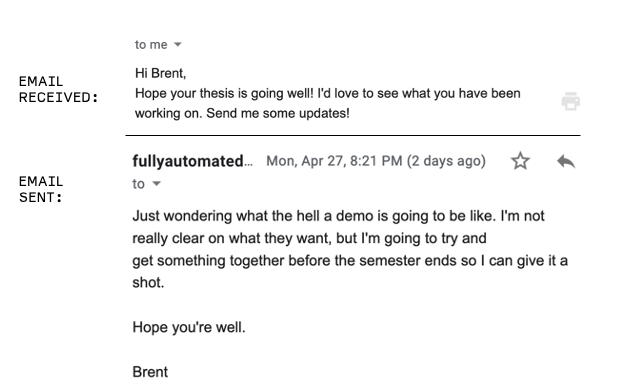

Echoes is my ITP thesis project. It started as a way to reckon with the fear of being replaced by AI by trying to replace myself. I trained machine learning models on 15 years of emails, years of messaging, and hours of my own voice so that they could speak for me. Then I integrated them with various communication media - Gmail, text messaging, and even Zoom - to see what would happen. I also used sentiment analysis and data segmentation to build more granular models based on emotional profile and time period. For example, I created an email bot trained to behave like my teenage self, and a messaging bot trained to behave like myself when I'm sad.

After testing, it was pretty clear that I did not succeed in replacing myself. The failures of these models to replicate human behavior are quite literally encoded into their assumptions. Because they assume that the past predicts the future, they are wholly backward-looking.

This means that they cannot react to new information, or new scenarios, the way we can. The most important moment of a life is just another data point for them. So they echo your past. They capture cadence, but not context. But by recreating you from your past data, they become a way to channel yourself, not as you are, but as you have been. They give voice to every version of you from the past, sitting in a datacenter somewhere, wanting to be heard.

Echoes is unlikely to win any TechCrunch awards, but it is a strange, funny, and sometimes beautiful thing to use. Right now, I am its only user, but I am eager for other people to try it. You can express your interest here.

Technical Stuff

This project was built using GPT-2, a ton of custom Python data processing, Twilio, the Gmail API, Max/MSP, NVIDIA sentiment analysis, first-order motion model, and Deep Convolutional Text To Speech. Someday I will write up a full technical explanation. This is not that day.
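Until that write-up exists, here is a rough sketch of the data segmentation idea described above: splitting a message archive into separate training corpora by time period and emotional tone. It is not the actual pipeline. The `Message` type, the `period_for` and `segment` helpers, the `birth_year` parameter, and the word-list scorer `toy_sentiment` are all hypothetical stand-ins, and the word lists take the place of a real sentiment model.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Message:
    sent_on: date
    text: str


# Toy word lists standing in for a real sentiment model (assumption).
SAD_WORDS = {"sad", "tired", "lonely", "sorry", "miss"}
HAPPY_WORDS = {"great", "love", "excited", "happy", "thanks"}


def toy_sentiment(text: str) -> str:
    """Label text as sad, happy, or neutral by counting matching words."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    sad = len(words & SAD_WORDS)
    happy = len(words & HAPPY_WORDS)
    if sad > happy:
        return "sad"
    if happy > sad:
        return "happy"
    return "neutral"


def period_for(sent_on: date, birth_year: int) -> str:
    """Bucket a message by the sender's approximate age (hypothetical rule)."""
    age = sent_on.year - birth_year
    return "teen" if age < 20 else "adult"


def segment(messages: list[Message], birth_year: int) -> dict[tuple[str, str], list[str]]:
    """Group message texts into one corpus per (period, sentiment) pair."""
    corpora: dict[tuple[str, str], list[str]] = defaultdict(list)
    for m in messages:
        key = (period_for(m.sent_on, birth_year), toy_sentiment(m.text))
        corpora[key].append(m.text)
    return dict(corpora)


messages = [
    Message(date(2006, 5, 1), "I am so excited for the show!"),
    Message(date(2019, 11, 3), "Sorry, I am tired and sad today."),
    Message(date(2019, 12, 1), "Meeting moved to 3pm."),
]
corpora = segment(messages, birth_year=1990)
for key, texts in sorted(corpora.items()):
    print(key, len(texts))
```

Each resulting corpus could then be used to fine-tune its own text model, which is roughly how a "teenage self" bot or a "sad self" bot would get its separate voice.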

This page is a work in progress, but some documentation of the project can be found below.

This is a short talk I gave about my thesis, complete with COVID-19 technical difficulties. Watch to the end to see me cry.

This is an interview with my bot from LIPP TV. Contrary to appearances, it is not reading the interviewer's mind - their audio was delayed.

These are the "real" demo videos:

These are some fake ads I made for fun:

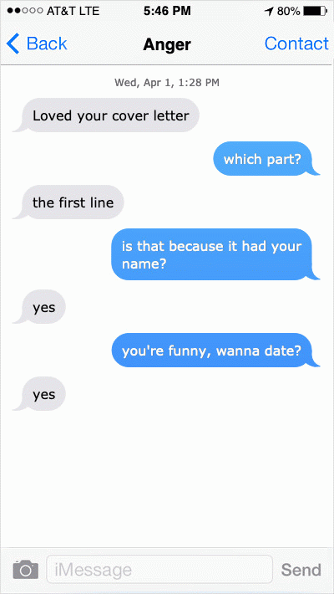

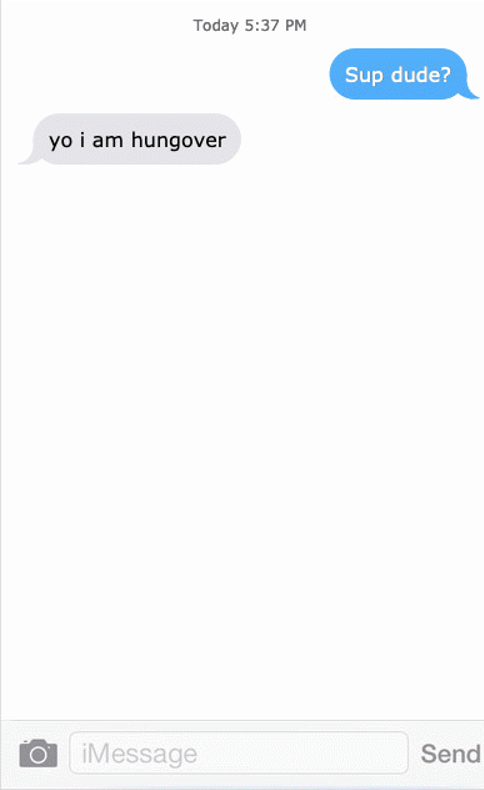

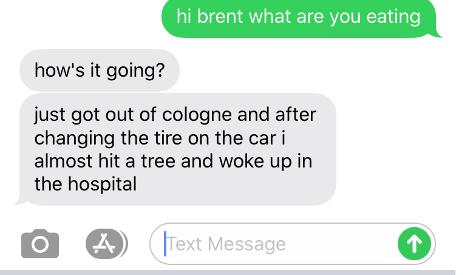

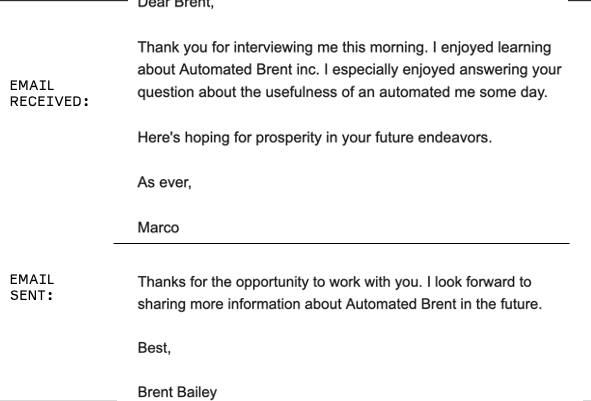

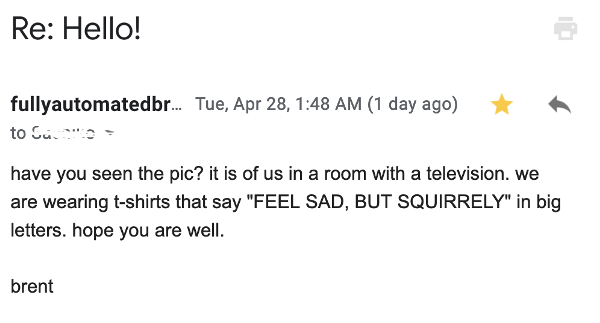

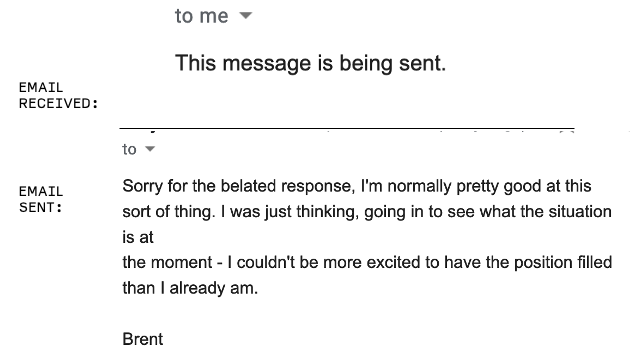

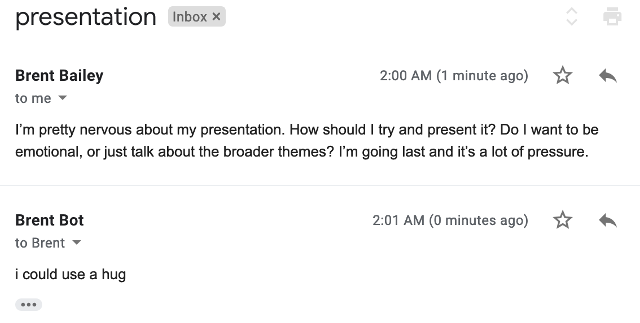

These are images of its output once I put the text models into use: